I am a second-year Ph.D. student in the Department of Computer Science at The University of Hong Kong (HKU), advised by Prof. Ping Luo. Before that, I obtained my master's degree from the University of Science and Technology of China (USTC), advised by Prof. Yang Cao. My previous research centered on generative models, with a focus on image, video, and 3D generation. My interests have since shifted towards native multimodal models. I am also actively exploring long-context modeling, an area in which I am an enthusiastic beginner. I am always open to research discussions and collaborations; please feel free to contact me via email (zhihengl0528 AT connect.hku.hk).

(*: Equal contribution)

Zhiheng Liu, Weiming Ren*, Haozhe Liu, Zijian Zhou, Shoufa Chen, Haonan Qiu, Xiaoke Huang, Zhaochong An, Fanny Yang, Aditya Patel, Viktar Atliha, Tony Ng, Xiao Han, Chuyan Zhu, Chenyang Zhang, Ding Liu, Juan-Manuel Perez-Rua, Sen He, Jürgen Schmidhuber, Wenhu Chen, Ping Luo, Wei Liu, Tao Xiang, Jonas Schult, Yuren Cong CVPR, 2026 pdf / page This work introduces Tuna, a native Unified Multimodal Model (UMM) that builds a continuous visual representation space for end-to-end image and video processing. Key highlights include:

Zhiheng Liu, Xueqing Deng, Shoufa Chen, Angtian Wang, Qiushan Guo, Mingfei Han, Zeyue Xue, Mengzhao Chen, Ping Luo, Linjie Yang NeurIPS, 2025 pdf / page WorldWeaver is a framework for long-horizon video generation that unifies RGB and perceptual conditions, leveraging depth-guided memory and segmented noise scheduling to enhance structural and temporal consistency.

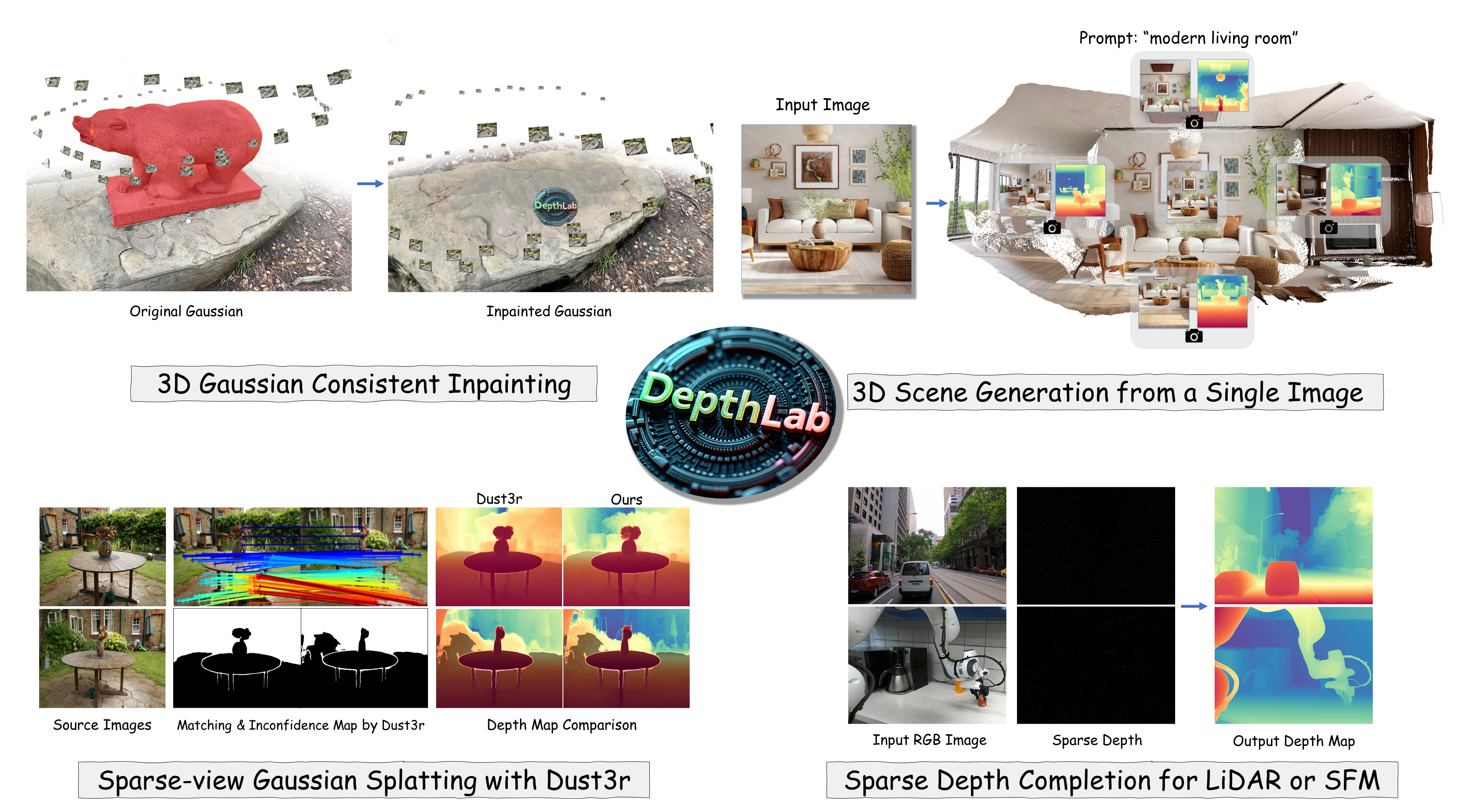

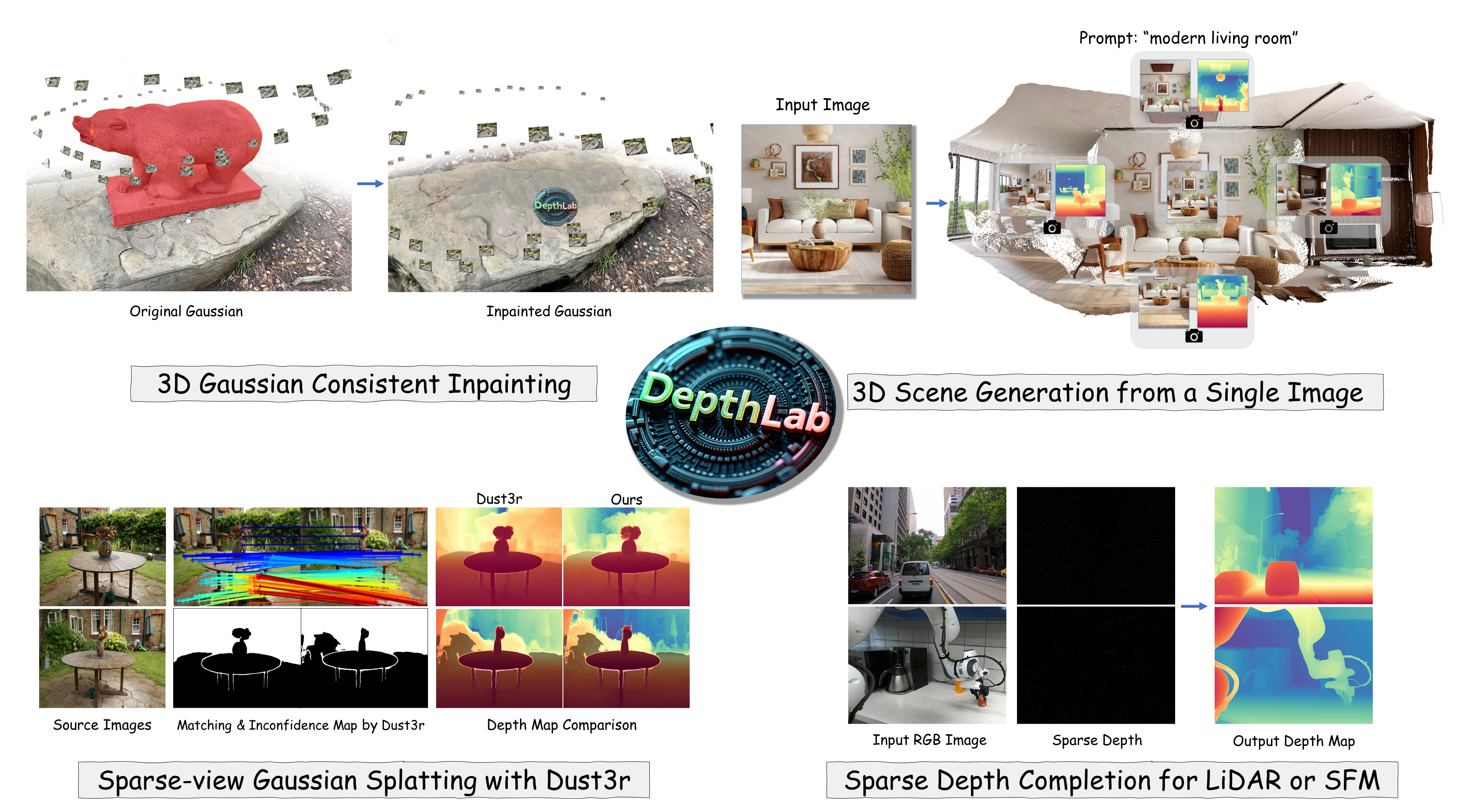

Zhiheng Liu*, Ka Leong Cheng*, Qiuyu Wang, Shuzhe Wang, Hao Ouyang, Bin Tan, Kai Zhu, Yujun Shen, Qicheng Chen, Ping Luo arXiv, 2024 pdf / page We propose a robust depth inpainting foundation model that can be applied to various downstream tasks to improve their performance.

Zhiheng Liu*, Hao Ouyang*, Qiuyu Wang, Ka Leong Cheng, Jie Xiao, Kai Zhu, Nan Xue, Yu Liu, Yujun Shen, Yang Cao arXiv, 2024 pdf / page We present an image-conditioned depth inpainting model that uses a diffusion prior to inpaint 3D Gaussians, achieving strong geometric and texture consistency.

Zhiheng Liu*, Ka Leong Cheng*, Xi Chen, Jie Xiao, Hao Ouyang, Kai Zhu, Yu Liu, Yujun Shen, Qicheng Chen, Ping Luo CVPR, 2025 Highlight pdf / page MangaNinja is a reference-based line art colorization method that enables precise matching and fine-grained interactive control.

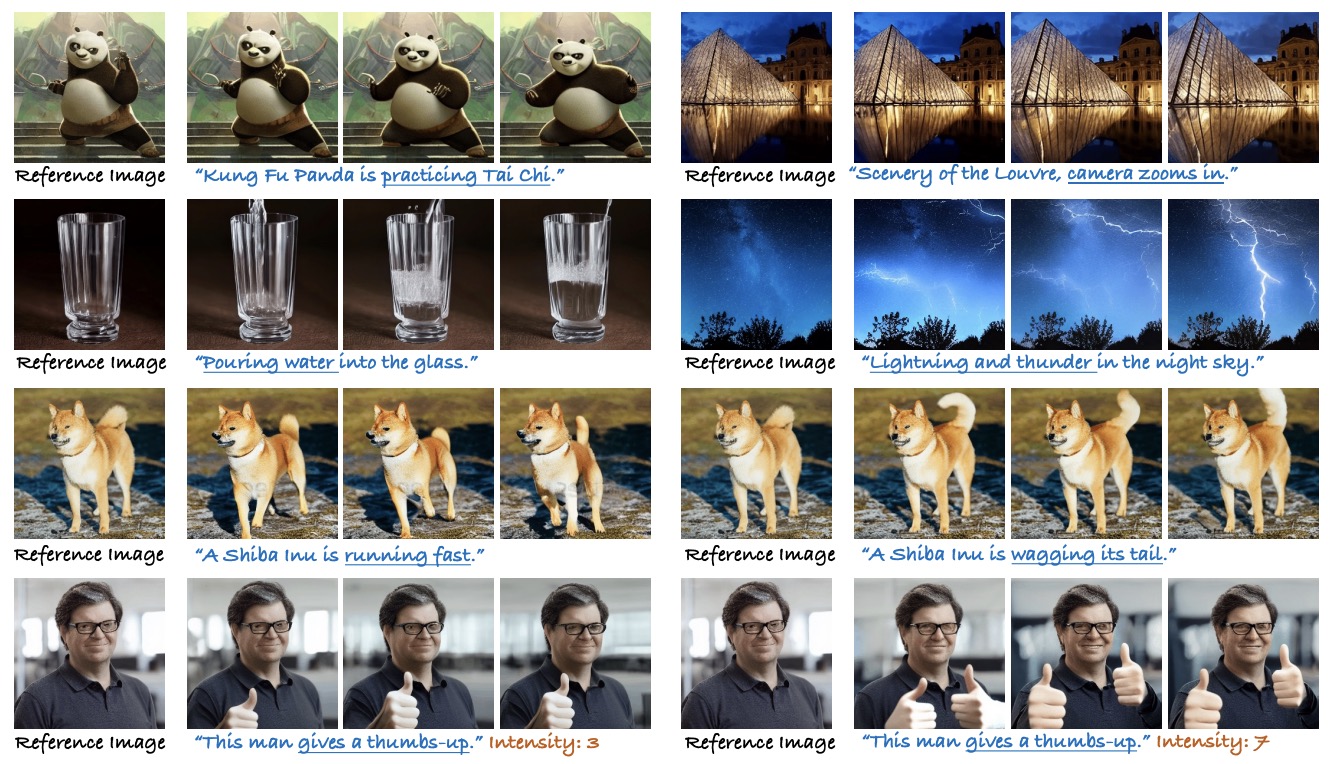

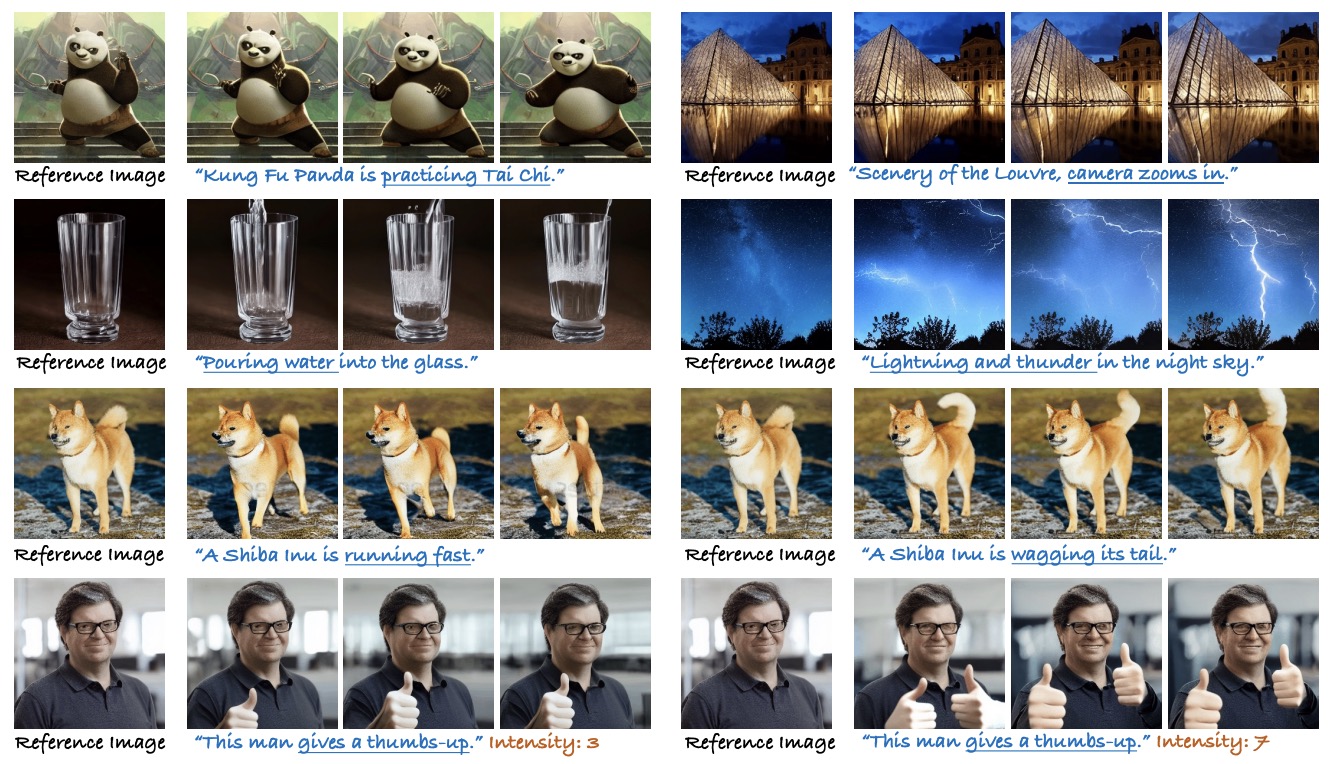

Xi Chen, Zhiheng Liu, Mengting Chen, Yutong Feng, Yu Liu, Yujun Shen, Hengshuang Zhao ECCV, 2024 pdf / page We present LivePhoto, a real-image animation method with text control. Unlike previous works, LivePhoto faithfully follows the text instructions while preserving the object identity.

Zhiheng Liu*, Yifei Zhang*, Yujun Shen, Kecheng Zheng, Kai Zhu, Ruili Feng, Yu Liu, Deli Zhao, Jingren Zhou, Yang Cao NeurIPS, 2023 pdf / page Cones 2 uses a simple yet effective representation to register a subject, requiring only about 5 KB of storage per subject. Moreover, Cones 2 allows flexible composition of multiple subjects without any model tuning.

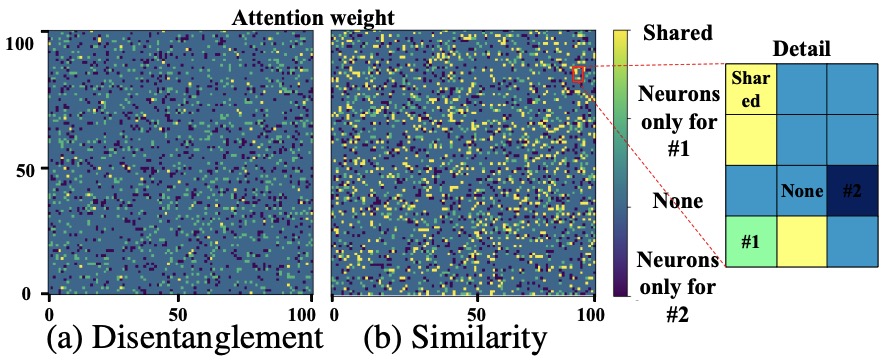

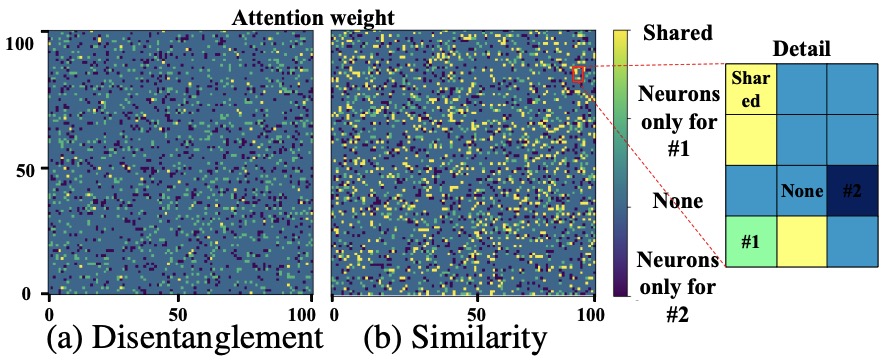

Zhiheng Liu*, Ruili Feng*, Kai Zhu, Yifei Zhang, Kecheng Zheng, Yu Liu, Deli Zhao, Jingren Zhou, Yang Cao ICML, 2023 Oral pdf / page We explore subject-specific concept neurons in a pre-trained text-to-image diffusion model. By concatenating multiple clusters of concept neurons representing different persons, objects, and backgrounds, the model can flexibly generate all the related concepts in a single image.

Design and source code from Jon Barron's website